How I Set Up a CI/CD Pipeline for Cloudflare Workers

Today, most of my projects on GitHub are kept up to date with Renovate. With auto-merging enabled, I want enough confidence that automated dependency updates won’t cause any regressions.

Testing Cloudflare Workers is a bit out of whack. Don’t get me wrong, I love Cloudflare Workers. However, the existing testing solutions out there feel a little unintuitive. On top of that, dollarshaveclub/cloudworker is no longer actively maintained, which is a bummer.

TL;DR: How to set up a CI/CD pipeline for a Cloudflare Worker project using GitHub Actions and Grafana k6.

Overview

Previously, I built a URL shortener clone with Cloudflare Workers. Using that existing project (GitHub link), let’s set up a CI/CD pipeline for it along with simple integration tests.

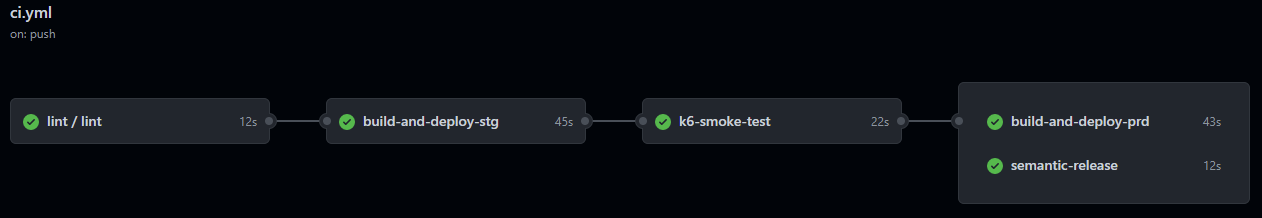

Our GitHub Actions CI/CD pipeline is rather straightforward. The stages (jobs) are as follows:

- Lint check or unit testing

- Deploy to the Staging environment

- Run the integration tests on our Staging environment

- Run semantic-release and deploy to the Production environment

Wrangler Config

To start, we’ll modify our existing wrangler.toml file. Remember, we need a Staging environment to deploy to so that we can run our integration tests against it:

```toml
[env.staging]
name = "atomic-url-staging"
workers_dev = true
kv_namespaces = [
  { binding = "URL_DB", id = "ca7936b380a840908c035a88d1e76584" },
]
```

Here are the things to take note of:

- `name`: The name must be unique for each environment and may contain only alphanumeric characters and `-`. Let’s name our Staging environment `atomic-url-staging`.

- `workers_dev`: Our integration tests run against the `<NAME>.<SUBDOMAIN>.workers.dev` endpoint. Hence, we need to deploy our Cloudflare Worker there by setting `workers_dev = true` (reference).

- `kv_namespaces`: Atomic URL uses KV as its database to store shortened URLs. Here, I chose to use a preview KV namespace as the test database. Why? Simply because it is the same development KV namespace that I use during local development (when running `wrangler dev`). Of course, you could just use a regular KV namespace; just make sure that you’re not using the Production KV `id`. Read how to create a KV namespace.
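Because the smoke tests target the `workers.dev` endpoint, the Staging `BASE_URL` can be derived from the same `name` used in wrangler.toml. Below is a minimal sketch, assuming the naming rule described above (letters, digits and `-` only); `workersDevUrl` is a hypothetical helper and `my-subdomain` is a placeholder for your own account’s workers.dev subdomain.

```javascript
// Hypothetical helper: builds the <NAME>.<SUBDOMAIN>.workers.dev URL that the
// smoke tests run against. The character rule follows this post's description
// (alphanumeric plus "-"); Cloudflare's own validation may be stricter.
const VALID_LABEL = /^[A-Za-z0-9-]+$/;

function workersDevUrl(name, subdomain) {
  if (!VALID_LABEL.test(name)) {
    throw new Error(`Invalid Worker name: "${name}"`);
  }
  if (!VALID_LABEL.test(subdomain)) {
    throw new Error(`Invalid workers.dev subdomain: "${subdomain}"`);
  }
  return `https://${name}.${subdomain}.workers.dev`;
}

// "my-subdomain" is a placeholder, not a real account subdomain.
console.log(workersDevUrl("atomic-url-staging", "my-subdomain"));
// https://atomic-url-staging.my-subdomain.workers.dev
```

Deriving the URL from the Worker name keeps the test target and the deployed environment from drifting apart when the name changes.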

Next, we’ll create a Production environment that deploys with our production KV namespace:

```toml
[env.production]
name = "atomic-url"
route = "s.jerrynsh.com/*"
workers_dev = false
kv_namespaces = [
  { binding = "URL_DB", id = "7da8f192d2c1443a8b2ca76b22a8069f" },
]
```

The Production environment section is similar to the Staging one, except that we set `workers_dev = false` and add a `route` for production.
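Since Staging must never write to the Production KV namespace, a cheap guard can compare the two environment sections before deploying. This is a minimal sketch: the objects below are hand-copied from the two wrangler.toml sections above (the sketch does not parse TOML), and `findIsolationProblems` is a hypothetical helper encoding this project’s setup rather than a Wrangler feature.

```javascript
// Hand-copied from the [env.staging] and [env.production] sections above.
const envs = {
  staging: {
    name: "atomic-url-staging",
    workers_dev: true,
    kv_namespaces: [{ binding: "URL_DB", id: "ca7936b380a840908c035a88d1e76584" }],
  },
  production: {
    name: "atomic-url",
    route: "s.jerrynsh.com/*",
    workers_dev: false,
    kv_namespaces: [{ binding: "URL_DB", id: "7da8f192d2c1443a8b2ca76b22a8069f" }],
  },
};

// Returns a list of problems; an empty list means the environments look isolated.
function findIsolationProblems({ staging, production }) {
  const problems = [];
  if (staging.name === production.name) {
    problems.push("staging and production share the same Worker name");
  }
  const prodIds = new Set(production.kv_namespaces.map((ns) => ns.id));
  for (const ns of staging.kv_namespaces) {
    if (prodIds.has(ns.id)) {
      problems.push(`staging binding ${ns.binding} points at a production KV namespace`);
    }
  }
  if (!staging.workers_dev) {
    problems.push("staging needs workers_dev = true for the smoke tests");
  }
  if (production.workers_dev || !production.route) {
    problems.push("production should use a route with workers_dev = false");
  }
  return problems;
}

console.log(findIsolationProblems(envs)); // []
```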

Deployment

To deploy manually from your local machine to each environment, run:

```shell
wrangler publish -e staging
wrangler publish -e production
```

That said, we’ll look into how to do this automatically in our CI/CD pipeline using GitHub Actions.
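If you deploy by hand often, a tiny wrapper can refuse typos in the environment name before Wrangler runs. This is a minimal sketch under a few assumptions: `publishArgs` and `publish` are hypothetical helpers, the Wrangler CLI is on your PATH, and the syntax is Wrangler 1.x as used in this post (newer Wrangler releases replaced `publish` with `deploy`).

```javascript
// Only the environments defined as [env.*] sections in wrangler.toml are accepted.
const ENVIRONMENTS = ["staging", "production"];

function publishArgs(env) {
  if (!ENVIRONMENTS.includes(env)) {
    throw new Error(`Unknown environment "${env}"; expected ${ENVIRONMENTS.join(" or ")}`);
  }
  return ["publish", "-e", env];
}

// With dryRun, only returns the command; otherwise runs it and throws on failure.
function publish(env, { dryRun = false } = {}) {
  const args = publishArgs(env);
  const command = `wrangler ${args.join(" ")}`;
  if (dryRun) return command;
  // Assumes the Wrangler CLI is installed and available on PATH.
  const { spawnSync } = require("child_process");
  const result = spawnSync("wrangler", args, { stdio: "inherit" });
  if (result.error) throw result.error;
  if (result.status !== 0) {
    throw new Error(`${command} exited with status ${result.status}`);
  }
  return command;
}

console.log(publish("staging", { dryRun: true })); // wrangler publish -e staging
```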

Before we move on, you can find the complete wrangler.toml configuration here.

Oh, here’s a cheat sheet for configuring wrangler.toml. I highly recommend making use of it!

Grafana k6

For integration testing, we’ll use a tool called k6. k6 is generally used for performance and load testing. Now, bear with me: we’re not going to add any load tests to our CI/CD pipeline, at least not today.

Here, we’ll be running smoke tests for this project whenever new commits are pushed to our main branch. A smoke test is essentially a type of integration test that performs a sanity check on a system.

In this case, running a smoke test is sufficient for me to confirm that our system deploys without regressions and can handle minimal load.

What to test

Here are the things we want to check, organized into groups, as part of the smoke test for our URL shortener app:

- The main page should load as expected with a 200 response status

```javascript
import http from 'k6/http'
import { check, group } from 'k6'

// The groups below run inside the script's default function.
group('visit main page', function () {
  const res = http.get(BASE_URL)
  check(res, {
    'is status 200': (r) => r.status === 200,
    'verify homepage text': (r) =>
      r.body.includes('A URL shortener POC built using Cloudflare Worker'),
  })
})
```

- Our main POST API endpoint, `/api/url`, should create a short URL for the original URL

```javascript
// Declared outside the groups so the next group can visit the short URL.
let shortenLink

group('visit rest endpoint', function () {
  const res = http.post(
    `${BASE_URL}/api/url`,
    JSON.stringify({ originalUrl: DUMMY_ORIGINAL_URL }),
    { headers: { 'Content-Type': 'application/json' } }
  )
  check(res, {
    'is status 200': (r) => r.status === 200,
    'verify createShortUrl': (r) => {
      const { urlKey, shortUrl, originalUrl } = JSON.parse(r.body)
      shortenLink = shortUrl
      return urlKey.length === 8 && originalUrl === DUMMY_ORIGINAL_URL
    },
  })
})
```

- Make sure that when we visit the generated short URL, it redirects us to the original URL

```javascript
group('visit shortUrl', function () {
  // k6 follows redirects by default, so res.status and res.url
  // describe the final response after the redirect.
  const res = http.get(shortenLink)
  check(res, {
    'is status 200': (r) => r.status === 200,
    'verify original url': (r) => r.url === DUMMY_ORIGINAL_URL,
  })
})
```

Finally, we configure our BASE_URL to point to the Staging environment we created in the previous section.

To test locally, simply run `k6 run path/to/test.js`. That’s all! You can find the full test script here.
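One caveat before relying on these checks in CI: in k6, a failing `check()` is only recorded and does not by itself make `k6 run` exit with a non-zero code, so the smoke-test job could stay green. Tying checks to a threshold, e.g. `thresholds: { checks: ['rate==1.0'] }` in the script’s exported `options`, makes k6 exit with a non-zero code (99) when that threshold is crossed. The plain-Node sketch below is not k6 code; it only mirrors that pass/fail rule, assuming results are summarized as hypothetical `{ passes, fails }` counts.

```javascript
// Not k6 itself: a plain-Node model of how a `checks: ['rate==1.0']`
// threshold turns check results into a CI pass/fail exit code.
const THRESHOLD_FAILED_EXIT_CODE = 99; // k6's exit code when a threshold fails

function checksRate({ passes, fails }) {
  const total = passes + fails;
  // This sketch treats "no checks ran" as a failure (rate 0).
  return total === 0 ? 0 : passes / total;
}

function smokeTestExitCode(summary) {
  // rate==1.0: every single check must pass, anything less fails the job.
  return checksRate(summary) === 1 ? 0 : THRESHOLD_FAILED_EXIT_CODE;
}

// Six checks in total across the three groups above.
console.log(smokeTestExitCode({ passes: 6, fails: 0 })); // 0
console.log(smokeTestExitCode({ passes: 5, fails: 1 })); // 99
```

A strict `rate==1.0` suits a smoke test: with only a handful of checks, even one failure is a real signal rather than noise.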

In case you are thinking about running load tests, read how to determine concurrent users in your load test.

GitHub Actions

I’ll be glossing over this section as it is pretty straightforward. You may refer to the final GitHub Actions workflow file here.

Let’s piece together everything we have. Below are the GitHub Actions that we’ll need to use:

- wrangler-action — For deploying Cloudflare Worker using Wrangler CLI

- k6 Action — For running k6

One thing to note about the workflow file: to make a job depend on another job, we use the `needs` keyword.
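To make the `needs` behavior concrete, here is a small plain-JavaScript model of the four stages listed earlier. The job ids are hypothetical and not necessarily the ones in the final workflow file. It orders jobs so each one runs only after everything it needs, and it models the default GitHub Actions rule that a job is skipped when a job it needs did not succeed, which is what keeps a failed smoke test from reaching production.

```javascript
// Hypothetical job ids; each value lists the jobs that job `needs`.
const jobs = {
  lint: [],
  "deploy-staging": ["lint"],
  "smoke-test": ["deploy-staging"],
  release: ["smoke-test"],
};

// Simple layered topological sort: a job is ready once all its needs have run.
function executionOrder(graph) {
  const names = Object.keys(graph);
  const order = [];
  const done = new Set();
  while (order.length < names.length) {
    const ready = names.filter(
      (job) => !done.has(job) && graph[job].every((dep) => done.has(dep))
    );
    if (ready.length === 0) throw new Error("Unresolvable needs (cycle or unknown job)");
    for (const job of ready) {
      order.push(job);
      done.add(job);
    }
  }
  return order;
}

// By default, a job is skipped if any job it needs failed or was skipped.
function skippedJobs(graph, failed) {
  const skipped = new Set();
  for (const job of executionOrder(graph)) {
    if (graph[job].some((dep) => failed.has(dep) || skipped.has(dep))) skipped.add(job);
  }
  return [...skipped];
}

console.log(executionOrder(jobs)); // [ 'lint', 'deploy-staging', 'smoke-test', 'release' ]
console.log(skippedJobs(jobs, new Set(["smoke-test"]))); // [ 'release' ]
```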

Actions Secrets

This project requires two Actions secrets:

- `CF_API_TOKEN`: Used by the Wrangler GitHub Action to automatically publish our Cloudflare Worker to its respective environment. You can create your API token using the "Edit Cloudflare Workers" template.

- `NPM_TOKEN`: This project also uses semantic-release to automatically publish to npm. To enable this, you’ll need to create an `NPM_TOKEN` via `npm token create`.

To add them to your GitHub repository secrets, check out this guide.

If you’ve looked at the final workflow file, you may have noticed the syntax `${{ secrets.GITHUB_TOKEN }}` and wondered why I didn’t mention adding GITHUB_TOKEN to our project’s Actions secrets. It turns out GitHub creates this token automatically and makes it available to all of your workflows.
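A missing secret usually surfaces as a confusing error deep inside a deploy or release step. As a hedged sketch, an early workflow step could fail fast instead. This assumes the secrets are mapped into the step’s environment (e.g. `env: NPM_TOKEN: ${{ secrets.NPM_TOKEN }}`), since secrets are not exposed as environment variables otherwise; `REQUIRED` and `missingSecrets` are hypothetical names, and the job grouping is illustrative.

```javascript
// Which variables each (hypothetical) job expects to find in its environment.
const REQUIRED = {
  deploy: ["CF_API_TOKEN"],
  release: ["GITHUB_TOKEN", "NPM_TOKEN"],
};

// Returns the names of required variables that are unset or empty.
function missingSecrets(env, required) {
  return required.filter((key) => !env[key]);
}

// In CI you would pass process.env; a fake object keeps this demo side-effect free.
const missing = missingSecrets({ GITHUB_TOKEN: "dummy" }, REQUIRED.release);
if (missing.length > 0) {
  console.error(`Missing secrets: ${missing.join(", ")}`); // Missing secrets: NPM_TOKEN
}
```

In a real step, setting `process.exitCode = 1` when `missing` is non-empty would fail the job before anything is published.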

Closing Remark

Serverless platforms are generally known to be hard to test and debug. However, that doesn’t mean we should skip testing them.

So, what’s next? Off the top of my head, we could do better. Here are a couple of improvements that we can make:

- Add a job/stage that automatically rolls back and reverts the commit when the smoke tests fail

- Create a dedicated testing environment for each pull request so that we can run smoke tests against it

- Implement canary deployments: probably overkill for this project, but it sounds like a good challenge

References

If you’re looking into unit testing Cloudflare Workers, here are my recommendations:

- findwork.dev/blog/testing-cloudflare-workers/

- blog.cloudflare.com/unit-testing-workers-in-cloudflare-workers/

- blog.cloudflare.com/unit-testing-worker-functions/

Here’s a decent video about setting up an ideal yet practical CI/CD pipeline: