How I Made a Chrome Extension To Help With Rephrasing

I decided to teach myself how to create a Chrome extension and learn a little bit about running LLMs directly in web browsers. It was a fun and interesting experience, so I wanted to jot down my thought process and what I learned.

Why I Made This

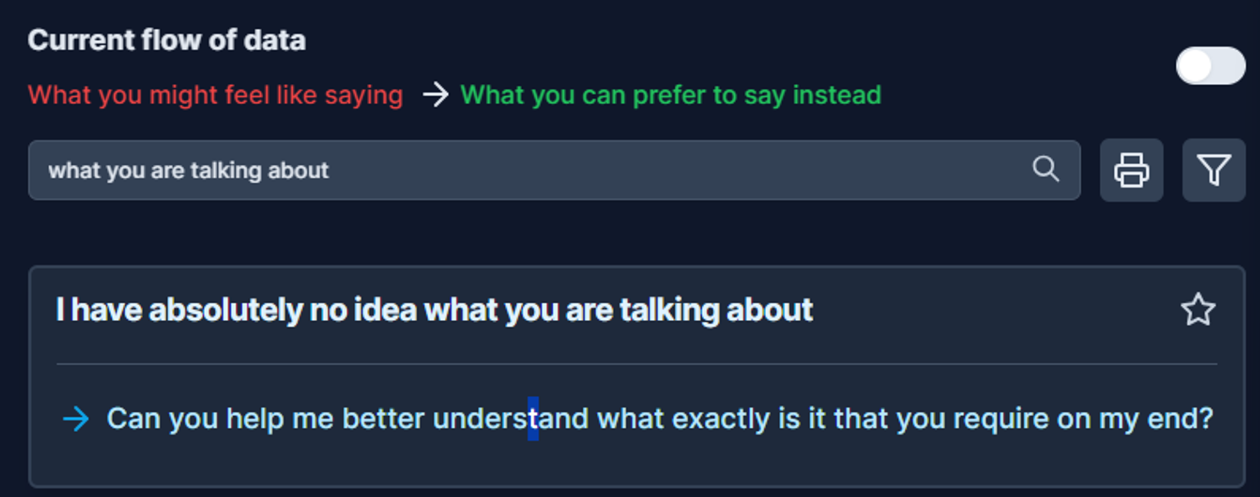

I occasionally find myself needing to change the casing of my sentences or rephrase short sentences to sound more professional, polite, or casual, depending on the context.

Typically, I would search for "how to professionally say <something>" or copy and paste the text into another tool, manually prompt it to make the desired changes, and then copy the revised text back to its original location.

This is a bit of a hassle for me for N * MyEntireLifetime.

Then I thought, wouldn't it be much more convenient to have an extension that could do just this from where I was typing?

Instead of switching between multiple tools, I could just highlight the text I wanted to change and use a shortcut to rephrase (and replace) it instantly.

Features I Want in My Extension

Here are the features that I want for my extension:

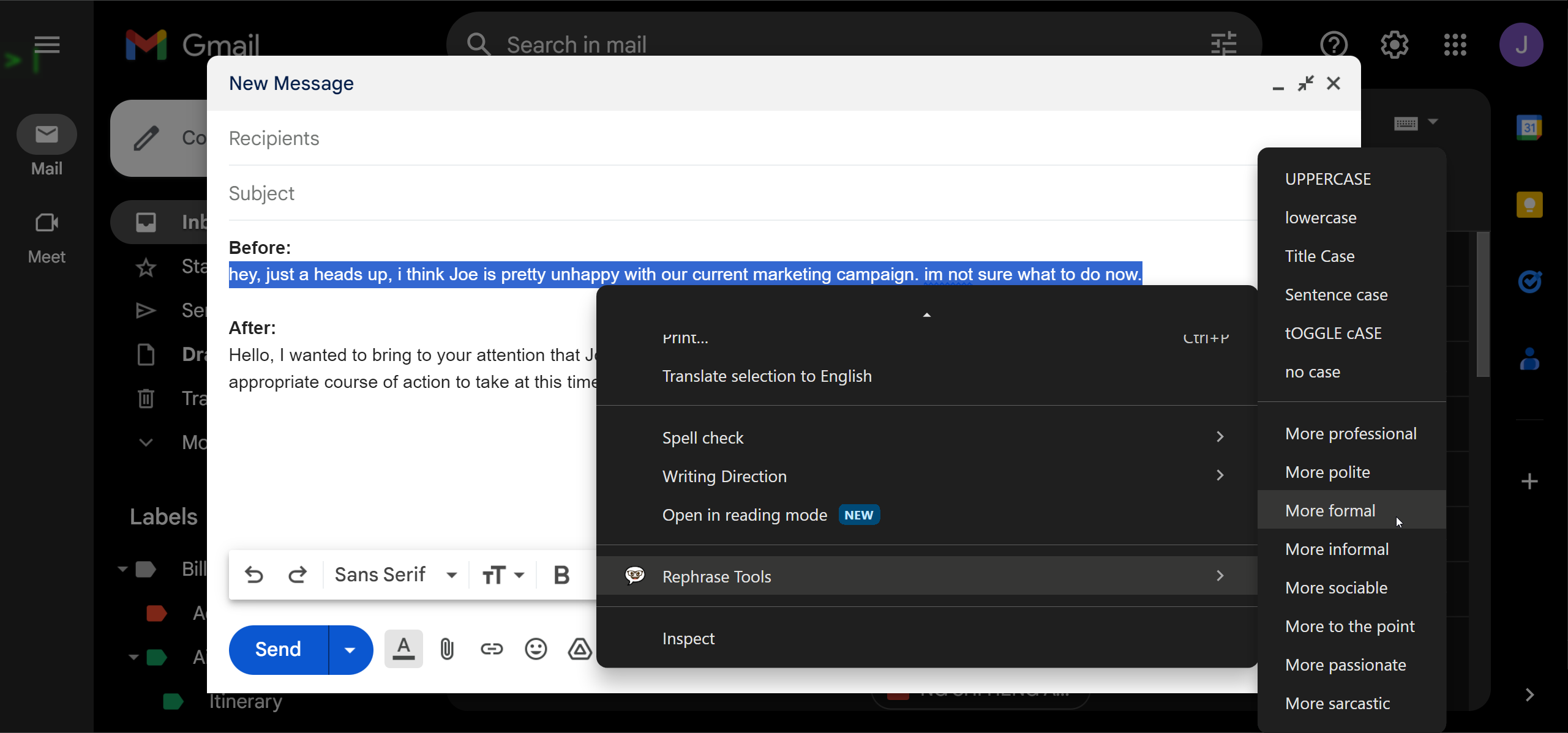

- Change the case of the text (e.g. Title Case, UPPERCASE, lowercase)

- Change the tone of text (e.g. making it sound more professional or casual or anything in between)

- Should work almost everywhere on the Chrome browser, e.g., Notion, WhatsApp, Gmail, etc.

- Support keyboard shortcuts and a context menu for easy access

What Did I Learn

Most of my past experiences/projects have been more focused on backend development. Working with client-side development like manipulating the browser DOM has always been an area where I feel less self-assured. So, creating a Chrome extension seemed like the perfect chance to mess around with client-side code.

The making of a Chrome Extension

    manifest.json
    src
    |-- background.js
    |-- content.js
    |-- popup.html
    |-- popup.js

First off, I discovered that making a Chrome extension is actually simpler than I initially thought. The key concept is pretty straightforward.

The main components are basically just these 4:

- Content script (e.g. content.js): This helps you interact with the browser DOM

- Background script; service worker (e.g. background.js): This lets you run things in the background

- manifest.json file: This turns your JS, HTML, and CSS files into a Chrome extension

- popup.js + popup.html (optional): This is for the little popup you see when you click the extension icon

It's that simple!
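To make the split between the background script and the content script more concrete, here is a minimal sketch of how the two could talk to each other. It is not the actual Rephrase Tools code: the menu id `rephrase-uppercase`, the `{ action: "uppercase" }` message shape, and the `replaceRange` helper are all made up for illustration. Both halves are shown in one block for brevity, but in a real extension they would live in separate files.

```javascript
// Hypothetical sketch: the menu id, message shape, and helper name are
// illustrative assumptions, not the extension's real identifiers.

// Pure helper: replace the characters in text[start, end) with `replacement`.
function replaceRange(text, start, end, replacement) {
  return text.slice(0, start) + replacement + text.slice(end);
}

// Only register listeners when actually running inside an extension.
const inExtension =
  typeof chrome !== "undefined" && !!chrome.runtime && !!chrome.runtime.id;

// --- background.js (service worker) ---
// chrome.contextMenus is only available in the background context.
if (inExtension && chrome.contextMenus) {
  chrome.runtime.onInstalled.addListener(() => {
    chrome.contextMenus.create({
      id: "rephrase-uppercase",
      title: "UPPERCASE",
      contexts: ["selection"], // show only when text is highlighted
    });
  });

  chrome.contextMenus.onClicked.addListener((info, tab) => {
    if (info.menuItemId === "rephrase-uppercase" && tab && tab.id !== undefined) {
      // Ask the content script in that tab to do the replacement.
      chrome.tabs.sendMessage(tab.id, { action: "uppercase" });
    }
  });
}

// --- content.js ---
// `document` only exists in the page context, not in the service worker.
if (inExtension && typeof document !== "undefined") {
  chrome.runtime.onMessage.addListener((message) => {
    if (message.action !== "uppercase") return;
    const el = document.activeElement;
    // This sketch only handles plain <textarea> and <input> elements.
    if (!(el instanceof HTMLTextAreaElement || el instanceof HTMLInputElement)) return;
    const start = el.selectionStart;
    const end = el.selectionEnd;
    if (start === null || end === null || start === end) return;
    const selected = el.value.slice(start, end);
    el.value = replaceRange(el.value, start, end, selected.toUpperCase());
  });
}
```

Rich editors such as Notion or Gmail use `contenteditable` elements rather than plain text fields, so a real content script would need more than this to work "almost everywhere".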

Recurring cost

Since one of the features changes the tone of your text (for example, making it sound more professional), the extension currently calls the OpenAI API. This means it costs me money to keep it alive. If it grows (unlikely, I know), I'm wary of keeping up with the growing cost, though I'm probably just overthinking.

My previous spam attack experience after hosting a URL shortener has made me a little wary about putting things online. The last thing I want is to have my side project unexpectedly rack up a large bill.
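For a sense of where the cost comes from, every tone change is one request to OpenAI's chat completions endpoint. Below is a rough sketch of what such a call could look like. The model name, the prompt wording, and the function names `buildToneRequest` and `rephrase` are my placeholders here, not what the extension actually uses.

```javascript
// Assumptions: the model name and the prompt wording are placeholders.
const OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions";

// Build the JSON body for a chat completions request.
function buildToneRequest(text, tone, model = "gpt-4o-mini") {
  return {
    model,
    messages: [
      {
        role: "system",
        content: `Rewrite the user's text in a ${tone} tone. Reply with the rewritten text only.`,
      },
      { role: "user", content: text },
    ],
  };
}

// Send the request and return the rewritten text.
async function rephrase(text, tone, apiKey) {
  const response = await fetch(OPENAI_CHAT_URL, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(buildToneRequest(text, tone)),
  });
  if (!response.ok) {
    throw new Error(`OpenAI request failed: ${response.status}`);
  }
  const data = await response.json();
  return data.choices[0].message.content.trim();
}
```

In practice the API key should sit behind a server rather than ship inside the extension, which is part of why an open endpoint like this can attract spam and unexpected bills.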

Why LLM in the browser

I spent weeks thinking about ways to make this economically viable. One idea I had was to see if I could run LLMs directly on the client side (web browser). The appeal of running LLMs in the browser is that they run locally, which means it's much cheaper since I won’t need a server.

It also offers privacy, as no data leaves the user's browser.

Exploring LLMs

I experimented with 2 notable projects to see if they could be integrated into my extension. Both enable running LLMs natively in web browsers by leveraging WebGPU.

Experiments and numbers

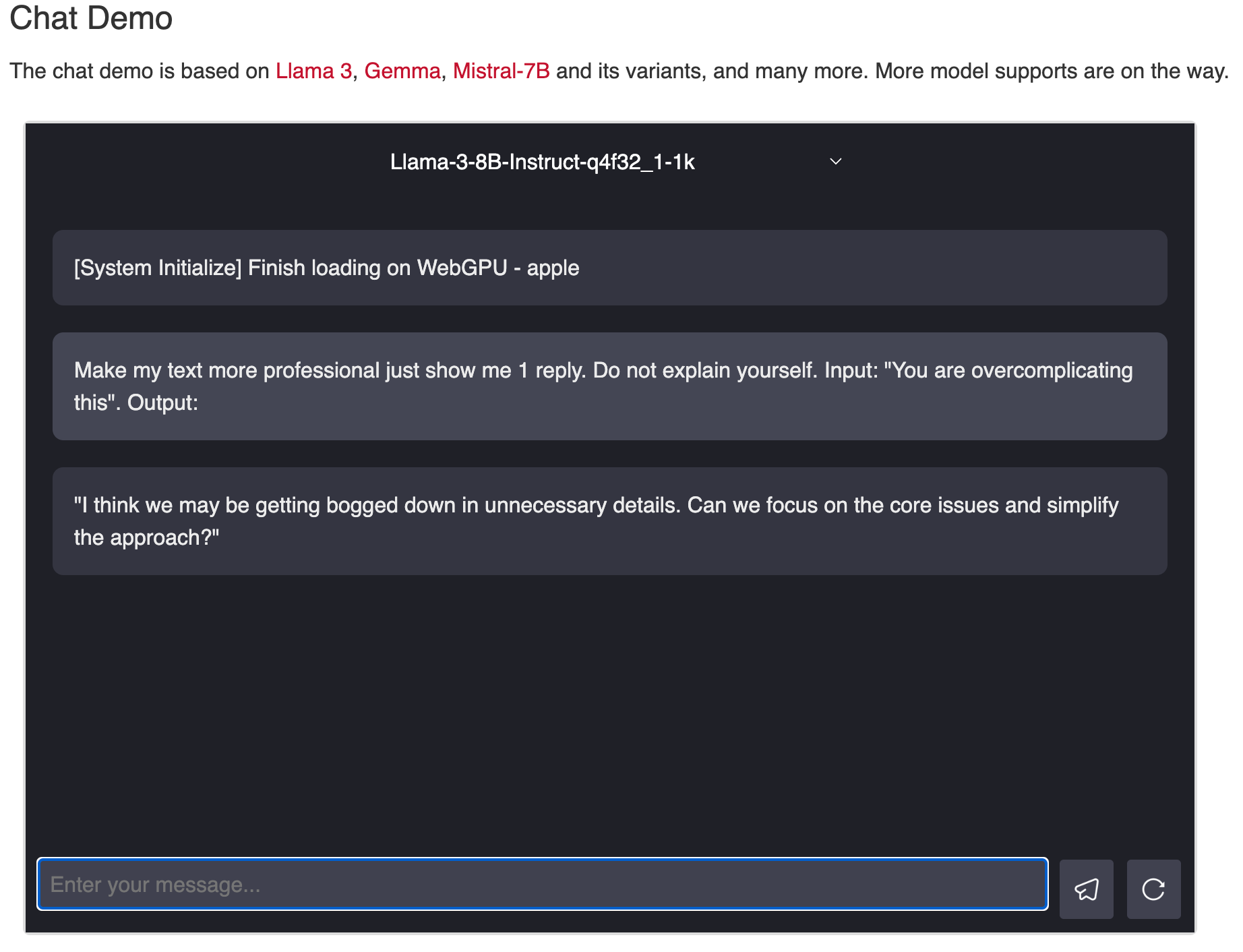

I tested out the WebLLM demo using the Llama-3-8B-Instruct-q4f32_1-1k model on an Apple M1 Pro chip.

It took about 116s (10s if fetched from cache) to initialize (~4GB download), which felt okay if I only had to do it once. After that, the model takes roughly 3s to 4s to respond, which is surprisingly fast!

That said, I got significantly worse results when repeating the experiment on my older Dell XPS 13 7390 (with an integrated GPU). The initial load from cache took about 40s, whereas responses often took more than 70s! Oof.

While browser LLMs are cool, I feel they aren't good enough for widespread public use yet. I ultimately decided not to integrate them into the current version of Rephrase Tools, as I wouldn't be able to provide a decent UX (i.e. init and response times under 2-3 seconds, no crashes, no lag). It's just not practical to expect most users to have a somewhat decent machine, much less one with a GPU.
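If a browser LLM ever became an opt-in path, the first step would be checking whether WebGPU is usable at all on the user's machine. Here is a small sketch of that check using the standard `navigator.gpu` entry point. The function names `hasWebGPU` and `canRunLocalModel` are my own, and the navigator object is passed in as a parameter so the logic is easy to try outside a browser.

```javascript
// Sync check: does this environment expose the WebGPU entry point?
function hasWebGPU(nav) {
  return Boolean(nav && "gpu" in nav && nav.gpu);
}

// Async check: can we actually obtain a GPU adapter?
// requestAdapter() resolves to null when no suitable adapter is found.
async function canRunLocalModel(nav) {
  if (!hasWebGPU(nav)) return false;
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter !== null;
  } catch {
    return false;
  }
}

// Usage in a browser (not executed here):
// canRunLocalModel(navigator).then((ok) => console.log("WebGPU usable:", ok));
```

Getting an adapter only says WebGPU is available, not that it is fast enough. My XPS would pass a check like this and still take more than a minute to respond.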

Charging money

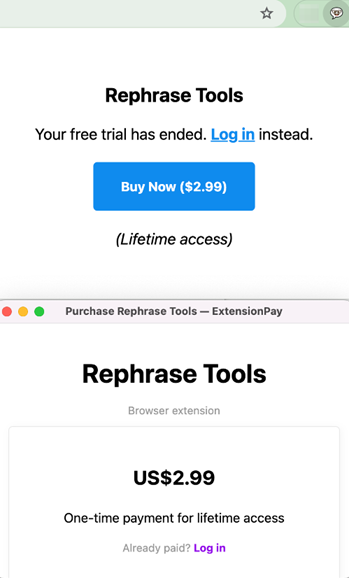

Based on my past experience, enforcing signups/charging a fee (even a small one) can act as a deterrent against spam by making it less economically viable for spammers.

At the end of 2023, I wanted to make something that could generate some form of revenue every year. Last year, I made a small add-on that runs on a subscription model. Quite frankly, I'm not a fan of the subscription model; I personally prefer the good ol' buy-once-use-forever model. Plus, the logic for the latter is much easier to manage!
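To show what I mean by "easier to manage", here is roughly all the access logic a buy-once model with a 7-day free trial needs. This is a sketch under assumptions: the `paid` and `trialStartedAt` fields are hypothetical names for whatever the payment provider reports, and `hasAccess` is a made-up helper.

```javascript
const TRIAL_DAYS = 7;
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// user: { paid: boolean, trialStartedAt: Date | null } (hypothetical shape)
function hasAccess(user, now = new Date()) {
  // Bought once: access forever, no renewal dates to track.
  if (user.paid) return true;
  // Never started a trial: no access yet.
  if (!user.trialStartedAt) return false;
  // Otherwise, access lasts until the trial window has elapsed.
  const elapsedMs = now.getTime() - user.trialStartedAt.getTime();
  return elapsedMs < TRIAL_DAYS * MS_PER_DAY;
}
```

A subscription would add renewal dates, cancellations, and failed payments on top of this, which is exactly the bookkeeping I'd rather avoid.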

Hanging out on Hacker News, I found ExtPay, a neat tool made to monetize browser extensions. It's pretty easy to use!

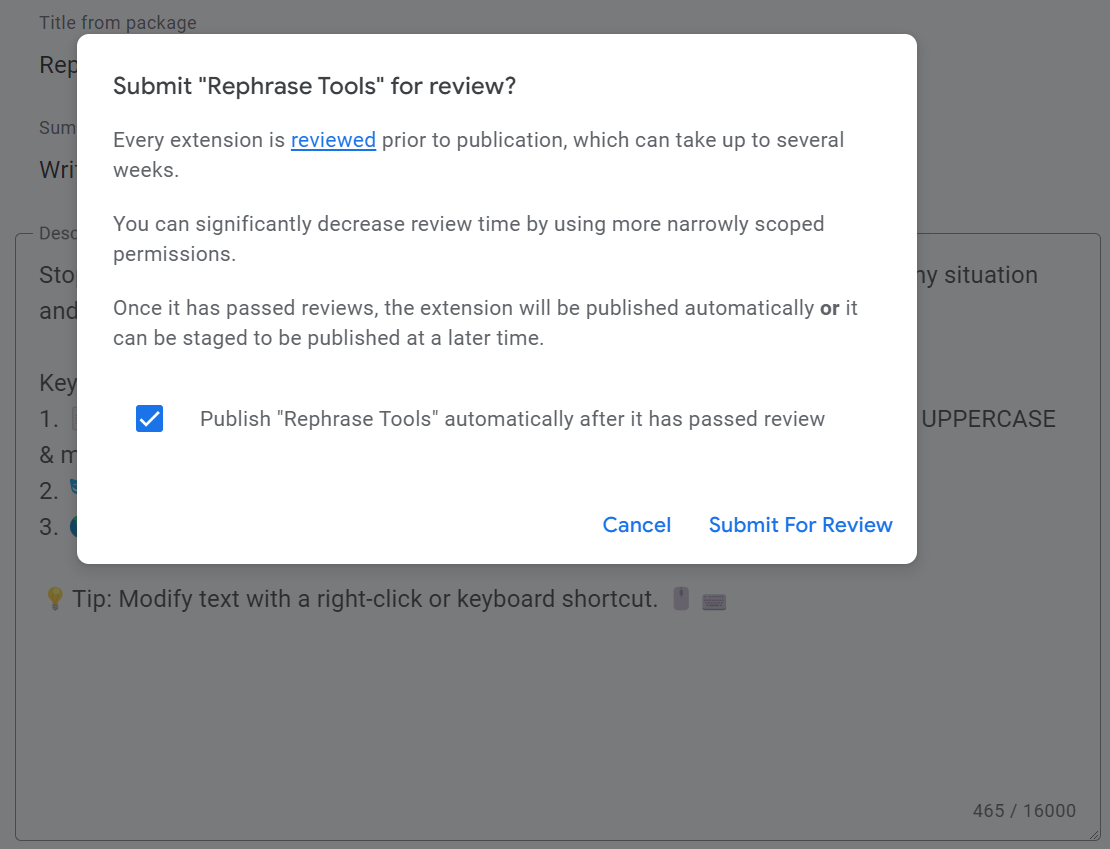

Publishing the extension

Finally, publishing a Chrome extension is super easy.

The developer dashboard website guides you through it, and it's much simpler than publishing a Google Workspace add-on (which I did before). However, you do need to pay a one-time developer registration fee of $5 to verify your account and publish items.

You can publish the extension as "unlisted" so only you and your friends/family can use it. This crossed my mind as I didn't want to make it public at first because I was afraid of spam and unexpected costs.

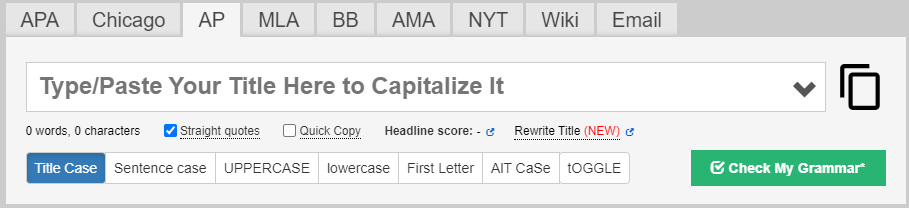

Extra: Nuances of Title Casing

Did you know there are so many styles of title casing in the world? Like, AP, APA, Chicago style, etc. I initially wanted to support all of these but couldn't find a reliable/battle-tested library on GitHub for it.

I wanted to create a library myself but didn't have the time, so I gave up. For now, the extension just does plain title casing that works like some_string.title() in Python.
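For reference, that Python-like behaviour can be approximated in a few lines of JavaScript. This is a sketch rather than the extension's actual code, and the names `toTitleCase` and `changeCase` are mine. It treats every run of letters as a word, so it shares the well-known quirk of Python's `title()` around apostrophes.

```javascript
// Approximation of Python's str.title(): lowercase everything, then
// uppercase the first letter of every run of letters.
function toTitleCase(text) {
  return text
    .toLowerCase()
    .replace(/\p{L}+/gu, (word) => word[0].toUpperCase() + word.slice(1));
}

// Dispatch on the case modes from the feature list.
function changeCase(text, mode) {
  switch (mode) {
    case "upper":
      return text.toUpperCase();
    case "lower":
      return text.toLowerCase();
    case "title":
      return toTitleCase(text);
    default:
      throw new Error(`Unknown case mode: ${mode}`);
  }
}

console.log(toTitleCase("hello wORLD"));  // Hello World
console.log(toTitleCase("they're here")); // They'Re Here
```

The second output shows why style-aware title casing (AP, APA, Chicago) needs a proper library: anything that is not a letter starts a new word, and small words like "of" or "the" get capitalized too.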

Maybe this is a problem I'll attempt to solve some other time.

Closing Thoughts

And that’s it! That's the journey behind the creation of Rephrase Tools, a Chrome extension made to simplify the process of rephrasing the tone of your writing.

If you're curious about trying out Rephrase Tools, you can start a free 7-day trial without entering any credit card information. Feel free to give it a spin!

When people ask me about topics related to LLMs, I'm usually pretty meh about it. But now, I'm actually really excited about the potential for browser-based LLMs to grow and develop. I think they show a lot of promise for the future.

Ideas for the Future

Here are some things I might work on next:

- Incorporate semantic-release-chrome plugin in my CI/CD pipeline to publish Chrome extensions automatically

- Make the extension work on Firefox too

- Check out extension.js. I found this a bit later! If I were to start another browser extension project, I'd definitely give it a try. Alternatively, there's also Plasmo, which is a full-fledged framework for building browser extensions

- Rebuild the entire thing to use browser-based LLMs as they mature