How I Saved Scraped Data in an SQLite Database on GitHub

Some time back, I stumbled upon this amazing idea of Git scraping made popular by Simon Willison. It involves using GitHub Actions to scrape data and save it directly into a Git repository. Essentially, for each new set of data scraped, a new commit is created in your repository.

The beauty of this is that you don’t need an application or database server since the VCS host (e.g. GitHub) runs and stores everything for you (for free!). Plus, Git inherently offers historical records of the data. Each commit serves as a time-stamped snapshot, enabling you to track data changes over time.

This is absolutely brilliant! I knew I had to try it.

“Drawbacks” of Git scraping

After trying it myself, one downside (although not a big one) is that you'll end up with a lot of Git commits over time depending on how often your job runs.

Also, querying data from Git commits and history is much more annoying than from a regular database like Postgres or SQLite.

Embracing SQLite

Around this time, I was also experimenting with SQLite. I usually used CSV or JSON to store data for small projects. However, I realized that SQLite has many advantages over these file formats. If I need the data in CSV format (which most people outside tech prefer), I can easily convert it to CSV anyway.

Moreover, having your database in a file format (like *.db, *.sqlite3) has many benefits. For example, you can store it anywhere easily — on Google Drive, Dropbox, AWS S3, or even storing it in Git.

The Idea: "GHActions-Scraping"

One day, it hit me:

"What if I could store the scraped data in an SQLite database within GitHub Artifacts?"

This way, I could run a scraping job periodically using GitHub Actions while keeping my data in a proper database like SQLite, stored on GitHub Artifacts instead of, say, AWS S3.

Here's what I had in mind:

With this setup, I can run the entire scraping system without needing a dedicated server (at least not in the traditional sense of a self-managing one).

Proof of Concept

To test this out, I needed an idea to prove what I proposed. I ended up adapting work from simonw/ca-fires-history.

For this, I’ve written a very simple Python script to fetch fire incident data from the California Department of Forestry and Fire Protection web API and store it in SQLite. Next, we need to write a GitHub Actions workflow file. Let's call it scrape.yml.

Requirements

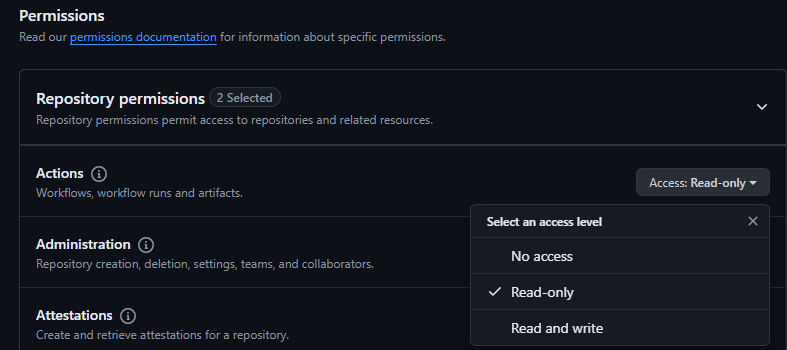

- We need to create a fine-grained access PAT token with Action (Read-only) permission

- Then we need to store the token under the repository's

Actions secrets and variablessettings

actions/download-artifact@v4 step so that we can download artifacts (which is our SQLite DB) from our last workflow runHere's how to create a scrape.yml containing the jobs and steps that mimic what we’ve drawn above. I've added some notes to the important parts:

name: Scrape latest data

on:

push:

workflow_dispatch: # This allows us to trigger manually from the GitHub Actions UI

schedule:

- cron: "6,26,46 * * * *" # Scheduled to run 3 times/hour (at minute 6, 26, and 46)

jobs:

scheduled:

runs-on: ubuntu-latest

steps:

- name: Check out this repo

uses: actions/checkout@v4

- name: Set up python

uses: actions/setup-python@v5

with:

python-version: 3.11

# Step to get the latest artifact run ID

# Fetch the latest artifact run ID using GitHub API and jq

# Save the run ID as an environment variable

# If your repository is set to private, an OAuth app token or personal access token (classic) with repo scope is required

- name: Get latest artifact run id

run: |

ARTIFACT_RUN_ID=$(curl -s "https://api.github.com/repos/${{ github.repository }}/actions/artifacts?per_page=1" | jq '.artifacts[0].workflow_run.id')

echo "artifact_run_id=$ARTIFACT_RUN_ID" >> $GITHUB_ENV

# Download the artifact (our SQLite DB!) from the last run

- name: Download artifact

uses: actions/download-artifact@v4

with:

name: ca-fires-history-db

path: ./data/

run-id: ${{ env.artifact_run_id }} # Run ID of the artifact (SQLite DB) uploaded from the last run

github-token: ${{ secrets.GH_PAT }} # REQUIRED. See https://github.com/actions/download-artifact?tab=readme-ov-file#download-artifacts-from-other-workflow-runs-or-repositories

continue-on-error: true # Set this to false after the first run

- name: Display downloaded file

run: ls data/

- name: Run scrape.py

run: python3 scrape.py

- name: Upload updated artifact

uses: actions/upload-artifact@v4

with:

name: ca-fires-history-db # Name of the artifact to upload, make sure to match the name in the download step

path: ./data/fires.db

if-no-files-found: errorRemember to create a new repository secret name GH_PAT at github.com/username/repository/settings/secrets/actions!

With this, we have an entire automated web scraping system where the data is collected periodically and stored in an SQLite database – all these within the GitHub Actions ecosystem!

Caveats

Long-running jobs

In my experience, this setup is great for handling smaller, shorter-running jobs. However, it doesn't work well for longer tasks if you're sticking with the free tier. You might end up seeing your runners getting killed or running out of memory or CPU if the job is too intensive.

90 days retention limit

As of now, GitHub Artifacts are retained for a maximum of 90 days for public repositories before they are automatically deleted (reference).

However, as our scraper downloads our SQLite database before each job, updating it, and re-uploading it as a new artifact more often than every 90 days. This means we won't lose any of the data collected from previous runs.

Artifacts upload limit

Watch out for the upload limit on artifacts. GitHub doesn't specify a strict limit, but discussions suggest it's around 5GB (2019).

One idea is you can consider setting up a Renovate Bot. It can help you with automated dependency updates and keep your project "active" so you won't have to worry about your workflow getting disabled.

Overall, I wanted to share that this setup has required minimal oversight and I am super happy about it!

Bonus: Visualizing our data with Datasette

With our data stored in an SQLite database, we can easily visualize or interact with it using Datasette, which is another tool I recently fell in love with.

Datasette is super easy to use. It allows you to easily publish data and share it with the world. If your site usage is low, you can even run it for free on Vercel.

To start, create a Vercel API token under Account Settings.

Then, we simply have to append the following step at the very end of our previous scrape.yml workflow:

# ... (omitted for brevity)

- name: Install datasette

run: |

pipx install datasette

- name: Deploy to vercel

env:

VERCEL_TOKEN: ${{ secrets.VERCEL_TOKEN }} # Vercel token for authentication

run: |-

datasette install datasette-publish-vercel

datasette publish vercel data/fires.db --project=cafireshistorydb --install=datasette-hashed-urls --install=datasette-cluster-map --token="$VERCEL_TOKEN" --metadata data/metadata.json --setting allow_download off --setting allow_csv_stream off --extra-options "-i"

Remember to create another new repository secret name VERCEL_TOKEN

Datasette also has a bunch of plugins that you can use right out of the box! Here, I use the datasette-cluster-map plugin to visualize fire incidents on a map! It’s pretty cool!

You can find the complete version of our scrape.yml file here on GitHub.

Closing Thoughts

That's it! Unlike Git scraping, GHActions scraping doesn't create a new Git commit for each new piece of data. Instead, we store the data in an SQLite database (within GitHub Artifacts), download it in the next run, add/update rows as needed, and then re-upload it for future runs.

Is this approach scalable? Probably not. But after keeping it running for a few weeks, I really think it requires very little to zero effort to maintain! It really depends on the scale that you're running. If your data is in the range of gigabytes or petabytes, you might want to stick with other solutions.

With that said, I had a lot of fun experimenting and building this. Cheers!