Backing Up Ghost Blog in 5 Steps

I’ve been writing on my self-hosted Ghost blog for some time now. In case you’re wondering, this site is hosted on a DigitalOcean Droplet.

For the most part, I felt like I was doing something inconsequential that only meant much to me. Today, the site has grown to the point where losing all my data would feel like a hat-flying slap to the face.

If you’re looking for the answer to "How do I back up my self-hosted Ghost blog?", you’ve come to the right place.

TL;DR: How to back up a self-hosted Ghost blog to cloud storage like Google Drive or Dropbox, and how to restore it, using Bash

Context

Getting started with Ghost is easy. You would typically pick between:

- Ghost (Pro) managed service

- Self-hosted on a VPS or serverless platform like Railway

I’d recommend that anyone (especially non-developers) opt for the managed version.

Yes, it’s relatively more expensive; so is every managed service. However, it’d most likely save you a bunch of headaches (and time) that come with self-hosting any site:

- Backups

- Maintenance

- Downtime recovery

- Security, etc.

In short, you’d sleep better at night.

On top of that, 100% of the Ghost(Pro) revenue goes to funding the development of the open-source project itself, so it’s a win-win.

“Uh, why are you self-hosting Ghost then?”

- Price: nothing beats the affordability of hosting on your own server. Today, I am paying about $6/mo for a Droplet with 1 vCPU and 1 GiB of memory.

- Knowledge gain: I’ve learned a lot from hosting and managing my own VPS.

Other perks of self-hosting include customizability, control, and privacy, which are great, albeit not my primary reasons.

Most importantly, all the hassles of self-hosting listed above felt like fun to me.

Until they don’t, I guess.

The pain of backing up Ghost

Setting up Ghost on DigitalOcean is as easy as clicking a button. Yet, there isn’t any proper built-in solution to back up your Ghost site.

According to Ghost’s documentation, you can manually back up your Ghost site through Ghost Admin. Alternatively, you could use the ghost backup command.

Even so, backing up the database was not mentioned as of the time of writing. I really wish they'd talk about this more.

Backing up with Bash

Why pick Bash?

Simplicity. Plus, Bash is great for command-line interaction.

What are we backing up

Two things:

- Ghost content/ directory, which includes your site/blog content in JSON, member CSV export, themes, images, and some configuration files

- MySQL database

Overview

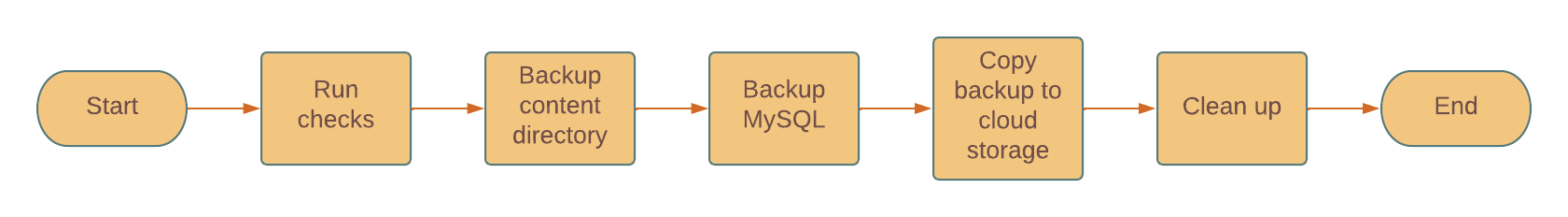

In this article, we’re going to write a simple Bash script that does all the following steps for us.

Assuming that we already have Rclone set up, here’s an overview of what our Bash script should cover:

- Optional: run requirement checks to ensure that the CLIs that we need are installed, e.g. mysqldump, rclone, etc.

- Back up the content/ folder where the Ghost blog posts are stored

- Back up our MySQL database

- Copy the backup files over to our cloud storage (e.g. Dropbox) using Rclone

- Optional: clean up the generated backup files

Utility functions

Let’s create util.sh which contains a set of helper functions for our backup script.

I like having timestamps printed on my logs, so:

#!/bin/bash

log() {

echo "$(date -u): $1"

}

With this, we can now use log instead of echo to print text prefixed with a timestamp:

$ log 'Hola Jerry!'

Sun Jul 22 03:01:52 UTC 2022: Hola Jerry!

Next, we’ll create a utility function that helps to check if a command is installed:

# util.sh

# ...

check_command_installation() {

if ! command -v "$1" &>/dev/null; then

log "$1 is not installed"

exit 1

fi

}

We can use this function in Step 1 to ensure that we have ghost, mysqldump, etc. installed before we start our backup process. If a CLI is not installed, we log the problem and exit with a non-zero status so that the failure is visible to whatever invoked the script.
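As a variation on the helper above, here is a minimal sketch that collects every missing command before giving up, so a fresh server reports all of its gaps in a single run. The function names find_missing_commands and require_commands are hypothetical and not part of the original script.

```shell
#!/bin/bash

# Hypothetical helper (not in the original script): print the name of each
# command from the argument list that cannot be found on PATH.
find_missing_commands() {
    local cmd
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "$cmd"
        fi
    done
}

# Hypothetical helper: return non-zero if any required command is missing,
# after listing all of the missing ones on stderr.
require_commands() {
    local missing
    missing=$(find_missing_commands "$@")
    if [ -n "$missing" ]; then
        echo "Missing commands: $(echo "$missing" | tr '\n' ' ')" >&2
        return 1
    fi
}

# Usage sketch:
#   require_commands expect gzip mysql mysqldump ghost rclone || exit 1
```

Returning a status instead of calling exit inside the helper leaves the decision to the caller, which makes the function easier to reuse.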

The backup script

In this section, we’ll create a backup.sh file as our main backup Bash script.

To keep our code organized, we break the steps in the overview into individual functions.

Before we begin, we’ll need to declare some variables and source our util.sh so that we can use the utility functions that we defined earlier:

#!/bin/bash

set -eu

source util.sh

TIMESTAMP=$(date +%Y-%m-%d-%H%M)

GHOST_DIR="/var/www/ghost/"

GHOST_MYSQL_BACKUP_FILENAME="backup-from-mysql-$TIMESTAMP.sql.gz"

REMOTE_BACKUP_LOCATION="Ghost Backups/"


Step 1: Run checks

- Check if the default /var/www/ghost directory exists. The ghost CLI can only be invoked within a folder where Ghost was installed

- Check if the required CLIs to run our backup are installed

# backup.sh

# ...

# run checks

pre_backup_checks() {

if [ ! -d "$GHOST_DIR" ]; then

log "Ghost directory does not exist"

exit 1

fi

log "Running pre-backup checks"

cd "$GHOST_DIR"

cli=("expect" "gzip" "mysql" "mysqldump" "ghost" "rclone")

for c in "${cli[@]}"; do

check_command_installation "$c"

done

check_ghost_status

}

Step 2: Back up the content directory

- Back up the content/ directory using the ghost backup CLI

- Here, ghost backup is configured using an expect script as shown in this example.

# backup.sh

# ...

# backup Ghost content folder

backup_ghost_content() {

log "Running ghost backup..."

cd $GHOST_DIR

expect wraith.exp

}

Step 3: Back up MySQL database

- Fetch all the necessary database credentials (username, password, DB name) from the Ghost CLI

- Run a check to ensure that we can connect to our MySQL database using the credentials above

- Create a MySQL dump and compress it into a .gz file using mysqldump and gzip

# backup.sh

# ...

check_mysql_connection() {

log "Checking MySQL connection..."

if ! mysql -u"$mysql_user" -p"$mysql_password" -e ";" &>/dev/null; then

log "Could not connect to MySQL"

exit 1

fi

log "MySQL connection OK"

}

backup_mysql() {

log "Backing up MySQL database"

cd $GHOST_DIR

mysql_user=$(ghost config get database.connection.user | tail -n1)

mysql_password=$(ghost config get database.connection.password | tail -n1)

mysql_database=$(ghost config get database.connection.database | tail -n1)

check_mysql_connection

log "Dumping MySQL database..."

mysqldump -u"$mysql_user" -p"$mysql_password" "$mysql_database" --no-tablespaces | gzip >"$GHOST_MYSQL_BACKUP_FILENAME"

}
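One caveat with the dump step: the script enables set -eu but not pipefail, so if mysqldump fails, the pipeline still reports gzip's exit status and the script carries on with an empty or partial archive. Below is a minimal sketch of one way to guard against that, assuming Bash. dump_and_compress is a hypothetical helper, not part of the original script.

```shell
#!/bin/bash

# Hypothetical helper: run the given command and gzip its stdout into the
# file named by $1. The subshell keeps `pipefail` from leaking into the rest
# of the script; its exit status is non-zero if either side of the pipe fails.
dump_and_compress() {
    local outfile="$1"
    shift
    (
        set -o pipefail
        "$@" | gzip >"$outfile"
    )
}

# Usage sketch inside backup_mysql (same arguments as the original dump line):
#   dump_and_compress "$GHOST_MYSQL_BACKUP_FILENAME" \
#       mysqldump -u"$mysql_user" -p"$mysql_password" "$mysql_database" --no-tablespaces
```

With set -e active in the calling script, a failed dump would then stop the backup before anything is uploaded.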

Step 4: Copy the compressed backup files to cloud storage

# backup.sh

# ...

# `rclone` backup

# assumes that `rclone config` is configured

rclone_to_cloud_storage() {

log "Rclone backup..."

cd $GHOST_DIR

rclone_remote_name="remote" # TODO: parse from config or prompt

rclone copy backup-from-*-on-*.zip "$rclone_remote_name:$REMOTE_BACKUP_LOCATION"

rclone copy "$GHOST_MYSQL_BACKUP_FILENAME" "$rclone_remote_name:$REMOTE_BACKUP_LOCATION"

}

Step 5: Clean up the backup files

# backup.sh

# ...

# clean up old backups

clean_up() {

log "Cleaning up backups..."

cd $GHOST_DIR

rm -rf backup/

rm -f backup-from-*-on-*.zip

rm -f "$GHOST_MYSQL_BACKUP_FILENAME"

}

Finally, we invoke all of the functions defined for Steps 1 to 5.

# At the end of the backup.sh

# ...

# main entrypoint of the script

main() {

log "Welcome to wraith"

pre_backup_checks

clean_up

backup_ghost_content

backup_mysql

rclone_to_cloud_storage

clean_up

log "Completed backup to $REMOTE_BACKUP_LOCATION"

}

# run the entrypoint defined above

main
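Because the script runs with set -eu, a failure in any step aborts it before the final clean_up, leaving backup files behind in the Ghost directory. Here is a minimal sketch of running a job with guaranteed cleanup via trap, assuming Bash. with_cleanup and run_backup_steps are hypothetical names, not part of the original script.

```shell
#!/bin/bash

# Hypothetical helper: run a command in a subshell and always call the
# cleanup function named by $1 when that subshell exits, whether the command
# succeeded, failed, or called `exit`. The command's exit status is returned.
with_cleanup() {
    local cleanup_fn="$1"
    shift
    (
        trap "$cleanup_fn" EXIT
        "$@"
    )
}

# Usage sketch (run_backup_steps would be a hypothetical wrapper around
# Steps 2 to 4):
#   with_cleanup clean_up run_backup_steps
```

The subshell means an exit inside one of the steps ends only that subshell, so the caller can still log the failure afterwards.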

And… we’re done!

Just show me the final code

I hear you. Feel free to check out the source code:

To use this project directly

- SSH into your VPS where you host your Ghost site

- Use the sudo -i -u ghost-mgr command to switch to the ghost-mgr user, which is responsible for managing Ghost

- Clone the repository onto your VPS

- Run make setup and update the email and password fields in the copied wraith.exp

- Run ./backup.sh from the wraith/ directory

Automating Backup With Cron

I despise doing manual maintenance and administrative tasks. Let’s schedule a regular backup for our Ghost site to ease our pain using Crontab:

- Run crontab -e

- For example, you can run a backup at 4 a.m. every Monday with:

# m h dom mon dow command

0 4 * * 1 cd ~/wraith/ && USER=ghost-mgr bash backup.sh > /tmp/wraith.log
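Note that the entry above redirects only stdout and overwrites /tmp/wraith.log on every run, so error messages and the output of earlier runs are not kept in that file. Here is a minimal sketch of a wrapper that appends both streams under a timestamped header. run_logged is a hypothetical helper, not part of the original project.

```shell
#!/bin/bash

# Hypothetical helper: append a UTC-timestamped header plus the command's
# stdout and stderr to the log file named by $1, then return the command's
# exit status.
run_logged() {
    local logfile="$1"
    shift
    {
        echo "=== $(date -u +%Y-%m-%dT%H:%M:%SZ) running: $*"
        "$@"
    } >>"$logfile" 2>&1
}

# Usage sketch, e.g. from a small wrapper script invoked by cron:
#   run_logged /tmp/wraith.log bash backup.sh
```

Appending means the log grows over time, so it may be worth rotating or truncating it occasionally.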

Restoring Ghost Backup

Backups are not backups unless you have tested restoring from them.

Let's test our backup locally using Docker.

- In a new directory, copy your backup-from-vA.BB.Z-on-YYYY-MM-DD-HH-MM-SS backup file there. Decompress the backup files using unzip

- Run Ghost locally using docker run -d --name some-ghost -e url=http://localhost:3001 -p 3001:2368 -v /path/to/images:/var/lib/ghost/content/images ghost to restore the blog images

- Visit localhost:3001/ghost to create an admin account

- From the Ghost Admin interface (localhost:3001/ghost/#/settings/labs), import your JSON Ghost blog content from the decompressed data/

- You can import your members' CSV from the Members page too
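The steps above restore the content export, but not the compressed MySQL dump from Step 3. Below is a minimal sketch for sanity-checking that dump and piping it into a database, assuming a reachable MySQL server and an existing target database. verify_dump, restore_dump, and their arguments are hypothetical names, not part of the original project.

```shell
#!/bin/bash

# Hypothetical helper: fail if the dump is missing, empty, or not a valid
# gzip archive. This checks the archive only, not the SQL inside it.
verify_dump() {
    local dump="$1"
    if [ ! -s "$dump" ]; then
        echo "Dump is missing or empty: $dump" >&2
        return 1
    fi
    if ! gzip -t "$dump" 2>/dev/null; then
        echo "Dump is not a valid gzip file: $dump" >&2
        return 1
    fi
}

# Hypothetical helper: decompress the dump and feed it to the mysql client.
# A bare -p makes mysql prompt for the password instead of taking it from
# the command line.
restore_dump() {
    local dump="$1" user="$2" database="$3"
    verify_dump "$dump" || return 1
    gunzip -c "$dump" | mysql -u"$user" -p "$database"
}

# Usage sketch (file, user, and database names are example values):
#   restore_dump backup-from-mysql-2022-07-22-0400.sql.gz ghost_user ghost_db
```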

You can also open bash within your Ghost Docker container using docker exec -it some-ghost bash

Closing Thoughts

Whether you’re just running a simple personal website or a proper business, having a proper backup is critical.

I am guilty of procrastinating in setting up my backups. Today, I finally got that off my to-do list.

"There are 2 kinds of people in this world: people who back up their files and people who haven't experienced losing all their files yet."

Reading this kind of gave me that little push I needed to avoid becoming part of a cautionary tale.