4 Web Scraping Challenges To Look Out For

Today, with the help of numerous open-sourced tools, libraries, frameworks, and many more web scraping solutions, web scraping can seem easier than ever.

While that is true, it can be foolish for someone to not expect any challenges while trying to scrape data from the web.

For those who are wondering —

“Why scrape the web”?

Gathering data is incredibly crucial in today’s world. Through web scraping, businesses can gain competitive advantages such as:

- Lead generation

- Pricing advantage

- Competitor monitoring

- Market research

If you are looking to learn web scraping with Scrapy, I would highly recommend you to read this really well-written 5 parts end-to-end Scrapy tutorial.

In this article, I wish to share with you some of the challenges that I have faced after scraping hundreds of product pages.

Let’s begin!

Challenge 1: Ever-changing website structure

To provide a better user experience, websites are always subjected to structural and design changes.

In such a scenario, your scrapers might need to be regularly set up or changed according to web page changes, even in the case of minor changes.

While this is not necessarily a complex task to cope with, the time and resources you will be spending dealing with this can be exhausting.

Use Monitoring

To cope with this issue, constant monitoring and error reporting with services such as Sentry are required.

Another tip is to always keep your spiders’ design as robust as possible to deal with any potential quicks in your targeted websites.

Challenge 2: JavaScript rendered sites

As you scrape more websites, you will notice your spiders won't be able to reach your desired data by using merely selectors because the data on these sites are dynamically loaded.

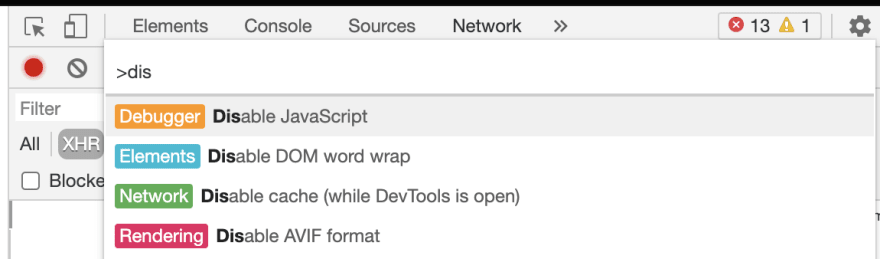

Disable JavaScript from the browser’s dev tool (Cmd/Ctrl + Shift + P)Scraping data from a website without server-side rendering often requires the execution of JavaScript code. Chances are you would need a scriptable headless browser such as Splash.

Headless Browser

A headless browser is a web browser without a graphical user interface.

If you are using Scrapy, check out this post on how you can scrape dynamically rendered sites

However, one should note that the use of any headless browsers would slow down the speed at which you can scrape a website.

Crawling Using REST API

Another solution here to be considered is to directly crawl the REST API responses instead of extracting data from the site’s raw HTML. Scraping directly from the website’s REST API also poses the advantage of returning cleaner and more structured data which are less likely to change.

Challenge 3: Anti-scraping countermeasures

If you have been scraping websites, it wouldn’t be uncommon to come across anti-scraping countermeasures.

Generally speaking, most smaller websites only have basic anti-scraping countermeasures such as banning IPs that are making excessive requests.

On the contrary, large websites such as Amazon, etc. would make use of more sophisticated anti-bot countermeasures which can make data extraction much more difficult.

Proxies

The first and foremost requirement for any web scraping product is to use proxy IPs.

Typically, rather than building your own proxy infrastructure, there are a large number of proxy services out there such as Zyte Smart Proxy Manager or ScraperAPI that can abstract all the complexities of managing your own proxies.

Tip: If you are starting out, I’d highly recommend you to check out ScraperAPI. Using it is as easy as pasting in the URL that you want to scrape inside their API endpoint’s query parameter. Check out their pricing here.

Challenge 4: Legal concerns

Web scraping is not illegal by all means, the usage of the data extracted could be restricted.

Before you start your journey to web scraping, I would highly recommend you to read this blog post as legal issues in web scraping can pose a very delicate challenge.

Final Thoughts

As you can see, web scraping has its own unique set of challenges. Hopefully, this article has made you more aware of the challenges involved in web scraping and how you can go about solving them.

Thank you for reading and have a wonderful day!