5 Useful Tips While Working With Python Scrapy

Today, there are a handful of Python Scrapy tutorials out there. However, I find that most of them do not cover the low-hanging fruit that can greatly improve the developer experience of web scraping.

In this post, I will be sharing with you some quick and easy tips that you can use when working with Scrapy.

These tips will make your life easier as a developer, and some of them also help to minimize the load on the websites that you scrape.

TL;DR

- Use HTTPCache during development

- Always use AutoThrottle

- Consume sites’ API whenever available

- Use bulk insert for database write operation in item pipelines

- Wrap your target URL with a proxy (e.g. ScraperAPI)

- Bonus: Colorized logging

Without further ado, let’s get started!

Use HTTPCache

While developing spiders (crawlers), we often check if our spiders are working or not by hitting the web server multiple times for each test.

As our development work is an iterative process, we would indirectly increase the server load for the site that we are scraping.

To avoid such behavior, Scrapy provides a built-in middleware called HttpCacheMiddleware which caches every request made by our spiders along with the related response.

Example

To enable this, simply add the code below to your Scrapy project’s settings.py

    # Enable and configure HTTP caching (disabled by default)
    HTTPCACHE_ENABLED = True

Ultimately, this is a win-win scenario: our tests run much faster, and we no longer bombard the site with requests while testing.
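A few related cache settings are worth knowing about. The fragment below is only a sketch: the setting names come from Scrapy’s HTTP cache middleware, but the one-hour expiry and the list of ignored status codes are illustrative assumptions, not values recommended by this post.

```python
# settings.py (sketch; the values are illustrative assumptions)

# Turn on the built-in HTTP cache middleware
HTTPCACHE_ENABLED = True

# Cached responses older than this many seconds are re-downloaded.
# The default, 0, means cached responses never expire.
HTTPCACHE_EXPIRATION_SECS = 3600

# Directory (relative to the project data dir) that stores the cache
HTTPCACHE_DIR = "httpcache"

# Do not cache responses with these status codes, so that a temporary
# server error is not replayed on every later test run
HTTPCACHE_IGNORE_HTTP_CODES = [500, 502, 503, 504]
```

During development you can delete the cache directory whenever you want a fresh copy of the pages.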

NOTE: Do remember to configure HTTPCACHE_EXPIRATION_SECS in production.

AutoThrottle

“The first rule of web scraping — do no harm to the website.”

Typically, to reduce the load on the websites that we are scraping, we would configure DOWNLOAD_DELAY in our settings.py.

However, as the capability to handle requests can vary across different websites, we can automatically adjust the crawling speed to an optimum level using Scrapy’s extension — AutoThrottle.

AutoThrottle adjusts the delay between requests according to the web server’s load, which it estimates from the latency of each response.

The delay is tuned so that the spider sends, on average, about AUTOTHROTTLE_TARGET_CONCURRENCY requests in parallel to each remote site. It never drops below DOWNLOAD_DELAY or rises above AUTOTHROTTLE_MAX_DELAY.
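To make the adjustment rule more concrete, here is a simplified sketch of how the next delay can be derived from a response’s latency, following the rules described in Scrapy’s AutoThrottle documentation. The function name `next_delay` and its parameters are hypothetical; this is an illustration, not the extension’s actual code.

```python
def next_delay(
    current_delay: float,
    latency: float,
    target_concurrency: float = 1.0,
    min_delay: float = 0.0,
    max_delay: float = 60.0,
    status: int = 200,
) -> float:
    """Simplified sketch of the AutoThrottle delay update (hypothetical helper)."""
    # The delay that would keep `target_concurrency` requests in flight
    target_delay = latency / target_concurrency

    # Move halfway from the current delay towards the target delay
    new_delay = (current_delay + target_delay) / 2.0

    # Keep the delay between DOWNLOAD_DELAY and AUTOTHROTTLE_MAX_DELAY
    new_delay = min(max(min_delay, new_delay), max_delay)

    # Non-200 responses (often fast error pages) may not decrease the delay
    if status != 200 and new_delay <= current_delay:
        return current_delay
    return new_delay
```

For instance, with a current delay of 5 seconds and a response latency of 1 second, the sketch moves the delay to 3 seconds; a quick error response would leave it at 5.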

Example

To enable autothrottle, just include this in your project’s settings.py:

    # Check out the other settings that this extension provides in the Scrapy docs
    # AUTOTHROTTLE_ENABLED (disabled by default)
    AUTOTHROTTLE_ENABLED = True

Not only that, but you can also reduce the chances of getting blocked by the website! As simple as that.
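If you want to tune the extension further, its other settings can sit next to the flag above. The values in this sketch mirror what I understand to be Scrapy’s defaults (apart from the debug flag, which I switch on here for illustration), so treat them as a starting point rather than a recommendation.

```python
# settings.py (sketch; values are assumptions to adjust per site)

AUTOTHROTTLE_ENABLED = True

# Initial download delay, in seconds
AUTOTHROTTLE_START_DELAY = 5.0

# Upper bound for the delay when the server responds slowly
AUTOTHROTTLE_MAX_DELAY = 60.0

# Average number of parallel requests to send to each remote site
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Log throttling stats for every response received (handy while tuning)
AUTOTHROTTLE_DEBUG = True
```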

Use the Site’s API

One of the key challenges to look out for while scraping the web is data that is loaded dynamically. When I was starting out, I would only consider using the site’s HTTP API after running into this particular issue. Not ideal.

Today, many websites have HTTP APIs available for third parties to consume their data without having to scrape the web pages. It is one of the best practices to always use the site’s API whenever available.

Moreover, fetching data directly via the site’s API has a lot of advantages, as the data returned is more structured and less likely to change. Another bonus is that we can avoid having to deal with pesky HTML.
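As a rough illustration of how little parsing an API needs, here is a minimal sketch that uses only the standard library. The endpoint URL, the `page` and `per_page` query parameters, and the `results` and `next_page` response fields are all hypothetical; a real site’s API will have its own shape.

```python
import json
from typing import List, Optional, Tuple
from urllib.parse import urlencode

# Hypothetical JSON endpoint, for illustration only
API_URL = "https://ecommerce.example.com/api/products"


def build_page_url(page: int, per_page: int = 50) -> str:
    """Build the URL of one page of the (assumed) paginated API."""
    return API_URL + "?" + urlencode({"page": page, "per_page": per_page})


def parse_products(body: str) -> Tuple[List[dict], Optional[int]]:
    """Turn a JSON response body into items plus the next page number."""
    payload = json.loads(body)
    # Keep only the fields we care about; no HTML selectors needed
    items = [
        {"name": product["name"], "price": product["price"]}
        for product in payload.get("results", [])
    ]
    # `next_page` is assumed to be missing or null on the last page
    return items, payload.get("next_page")
```

Inside a spider callback, you could call `parse_products(response.text)`, yield each item, and yield a new `scrapy.Request(build_page_url(next_page), callback=self.parse)` while `next_page` is not `None`.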

Bulk (batch) Insert to Database

As you might already know, we can store the scraped items in our database using Scrapy’s item pipelines.

For starters, we can easily write a single row of data for each item that we scraped from a website.

As we start to scale up by scraping multiple sites with thousands or more items concurrently, we will soon run into issues with our database write.

To cope with that, we shall use bulk insert (saving).

Example

Here is an example of how to use bulk insert with SQLAlchemy in your Scrapy pipeline:

    import logging

    from scrapy import Spider
    from sqlalchemy.orm import sessionmaker

    from example.items import ProductItem
    from example.models import Price, Product, create_table, db_connect

    logger = logging.getLogger(__name__)


    class ExampleScrapyPipeline:
        """
        An example pipeline that saves new products and their prices into the database
        """

        def __init__(self):
            """
            Initializes the database connection and sessionmaker
            """
            engine = db_connect()
            create_table(engine)
            self.Session = sessionmaker(bind=engine)
            self.products = []
            self.prices = []

        def process_item(self, item: ProductItem, spider: Spider) -> ProductItem:
            """
            This method is called for every item pipeline component
            We save each product as a dict in the `self.products` list so that it can later be used for bulk saving
            """
            product = dict(
                name=item['name'],
                vendor=item['vendor'],
                quantity=item['quantity'],
                url=item['url'],
            )
            self.products.append(product)
            self.prices.append(item['price'].amount)
            return item

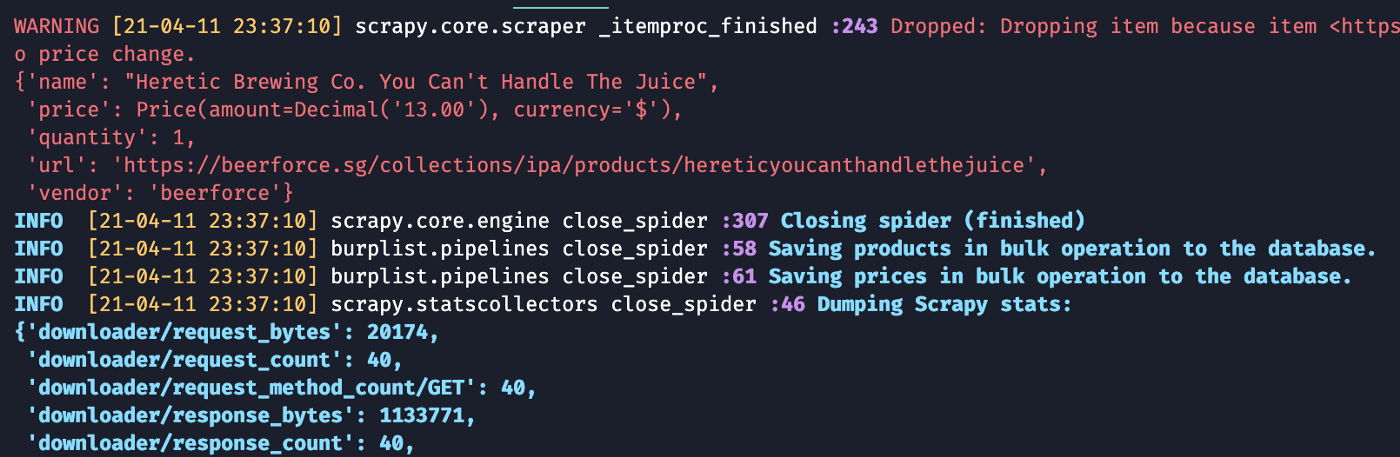

        def close_spider(self, spider: Spider) -> None:
            """
            Saves all the scraped products and prices in bulk on the spider close event
            Sadly we currently have to use `return_defaults=True` while bulk saving Product objects, which greatly reduces the performance gains of the bulk operation
            Prices do get inserted into the DB in bulk, though
            Reference: https://stackoverflow.com/questions/36386359/sqlalchemy-bulk-insert-with-one-to-one-relation
            """
            session = self.Session()
            try:
                logger.info('Saving products in bulk operation to the database.')
                # Set `return_defaults=True` so that the PK value (inserted one at a time) is available for FK usage in another table
                session.bulk_insert_mappings(Product, self.products, return_defaults=True)

                logger.info('Saving prices in bulk operation to the database.')
                prices = [dict(price=price, product_id=product['id']) for product, price in zip(self.products, self.prices)]
                session.bulk_insert_mappings(Price, prices)
                session.commit()
            except Exception as error:
                logger.exception(error, extra=dict(spider=spider))
                session.rollback()
                raise
            finally:
                session.close()

TL;DR: We save each scraped item as a dictionary in a simple list (created in __init__ and filled in process_item) and bulk insert them when close_spider is called. (source code)

We use bulk_insert_mappings instead of bulk_save_objects here because it accepts lists of plain Python dictionaries, which avoids the overhead of instantiating mapped objects and assigning state to them. Hence, it’s faster. Check out the comparison here.

The code example above showcases the scenario where you are dealing with Foreign Keys (FK). If the rows to be inserted only refer to a single table, then there is no reason to set return_defaults to True (source).

In short, bulk insert is a lot more efficient and faster than row-by-row operations. You can use bulk insert to insert millions of rows in a very short time.

Caveat

One of the challenges of using bulk inserts is that if you try to add too many records at once, the database may lock the table for the duration of the operation. So depending on your application’s needs, you might want to consider reducing the size of your batch (e.g. commit once every 10,000 items).
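One way to cap the batch size is to split the collected rows into fixed-size chunks and commit after each chunk. The helpers below are a sketch under that assumption; `chunked` and `save_in_batches` are hypothetical names, and the insert and commit steps are passed in as callables so that the example does not depend on a database.

```python
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def chunked(rows: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` rows."""
    if size <= 0:
        raise ValueError("size must be positive")
    batch: List[T] = []
    for row in rows:
        batch.append(row)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        # Emit the final, possibly smaller, batch
        yield batch


def save_in_batches(
    rows: Iterable[T],
    insert_batch: Callable[[List[T]], None],
    commit: Callable[[], None],
    batch_size: int = 10_000,
) -> int:
    """Insert rows batch by batch, committing after each one.

    Returns the number of batches written.
    """
    batches = 0
    for batch in chunked(rows, batch_size):
        insert_batch(batch)
        commit()
        batches += 1
    return batches
```

In the pipeline above, `insert_batch` could be something like `lambda batch: session.bulk_insert_mappings(Price, batch)` and `commit` could be `session.commit`. Keep in mind that committing per batch means a failure halfway through leaves the earlier batches in the database.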

Using Proxy API

While scraping large e-commerce sites such as Amazon, you’ll often find yourself needing proxy services. Instead of building your own proxy infrastructure, the easiest option is to use a proxy API such as Scraper API.

Let’s create a utility function that takes in a URL and turns it into a Scraper API URL:

    import logging
    from urllib.parse import urlencode

    from scrapy.utils.project import get_project_settings

    logger = logging.getLogger(__name__)
    settings = get_project_settings()


    def get_proxy_url(url: str) -> str:
        """
        We send all our requests to the https://www.scraperapi.com/ API endpoint in order to use their proxy servers
        This function converts a regular URL to Scraper API's proxy URL
        """
        scraper_api_key = settings.get('SCRAPER_API_KEY')
        if not scraper_api_key:
            # Fall back to the direct URL when no key is configured
            logger.warning('Scraper API key not set.', extra=dict(url=url))
            return url

        proxied_url = 'http://api.scraperapi.com/?' + urlencode({'api_key': scraper_api_key, 'url': url})
        logger.info(f'Scraping using Scraper API. URL <{url}>.')
        return proxied_url

A utility function that converts your target URL to Scraper API’s proxy URL

To use it, simply wrap the URL that you want to scrape with our newly created get_proxy_url function.

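    # Example in your spider.py
    import scrapy

    from example.utils import get_proxy_url


    class ExampleSpider(scrapy.Spider):  # hypothetical spider, for illustration
        name = "example"

        def start_requests(self):
            url = "https://ecommerce.example.com/products"
            yield scrapy.Request(url=get_proxy_url(url), callback=self.parse)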

In my opinion, using Scraper API was a breeze and their free tier allows you to scrape 1,000 web pages per month. Check out their pricing here.

Bonus: Colorized Logging

“How can I have logs that are conveniently conspicuous based on color?”

While developing my Scrapy projects, I often found myself asking that same question over and over again. Enter colorlog, a package that does exactly that!

After installing the package into your virtual environment, simply add the following code to your settings.py to enable colorized logging:

    import copy

    from colorlog import ColoredFormatter
    import scrapy.utils.log

    color_formatter = ColoredFormatter(
        (
            '%(log_color)s%(levelname)-5s%(reset)s '
            '%(yellow)s[%(asctime)s]%(reset)s'
            '%(white)s %(name)s %(funcName)s %(bold_purple)s:%(lineno)d%(reset)s '
            '%(log_color)s%(message)s%(reset)s'
        ),
        datefmt='%y-%m-%d %H:%M:%S',
        log_colors={
            'DEBUG': 'blue',
            'INFO': 'bold_cyan',
            'WARNING': 'red',
            'ERROR': 'bg_bold_red',
            'CRITICAL': 'red,bg_white',
        }
    )

    _get_handler = copy.copy(scrapy.utils.log._get_handler)


    def _get_handler_custom(*args, **kwargs):
        handler = _get_handler(*args, **kwargs)
        handler.setFormatter(color_formatter)
        return handler


    scrapy.utils.log._get_handler = _get_handler_custom

Wrap Up

In short, you learned about how you can easily avoid bombarding the websites that you want to scrape by using HTTPCACHE_ENABLED and also AUTOTHROTTLE_ENABLED. We must do our part to ensure that our spiders are as non-invasive as possible.

On top of that, we can reduce the number of write operations to our database by incorporating bulk inserts in our item pipelines.

That is all I have today and I hope you learned a thing or two from this post!

Cheers, and happy web scraping!